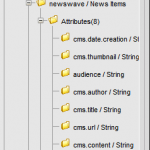

The next step was generating the query with DS Attributes. Nice thing about SQL is it doesn’t care about line breaks, so you can condense it down to one line. This is good as the XML engine in Delivery Server may not preserve these. I didn’t test conclusively, if you need white-space or formatting for say a REST call you will want to test thoroughly.

This is how I broke down the SQL to ‘templatize’ it for Delivery Server. Each line that needs Delivery Server variables is broken out.

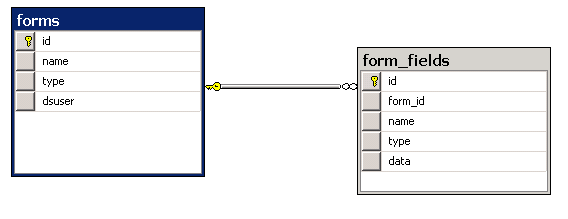

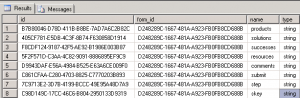

DECLARE @formID as varchar(50) DECLARE @InsertOutputForm table ( form_id varchar(50) );DECLARE @InsertOutputFormFields table( id varchar(50), form_id varchar(50), name varchar(50), type varchar(50)); INSERT INTO dbo.forms (name,type,dsuser) OUTPUT INSERTED.id as form_id INTO @InsertOutputForm

VALUES (N'partnerinfoform', N'form',N'cindy')

SET @formID=(SELECT form_id FROM @InsertOutputForm); INSERT INTO dbo.form_fields (form_id, name, type, data)OUTPUT INSERTED.id, INSERTED.form_id, INSERTED.name, INSERTED.type INTO @InsertOutputFormFields VALUES

(@formID, 'products', 'string', 'products'),

(@formID, 'solutions', 'string', 'solutions'),

(@formID, 'successes', 'string', 'successes'),

(@formID, 'resources', 'string', 'resources'),

(@formID, 'comments', 'string', 'test'),

(@formID, 'submit', 'string', ''),

(@formID, 'step', 'string', '430'),

(@formID, 'skey', 'string', 'SID-04000407-1F158E8D')

;

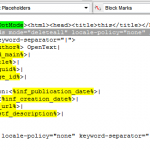

Next prepare to drop it into a DS XML file with pseudo inline attribute syntax.

DECLARE @formID as varchar(50) DECLARE @InsertOutputForm table ( form_id varchar(50) );DECLARE @InsertOutputFormFields table( id varchar(50), form_id varchar(50), name varchar(50), type varchar(50)); INSERT INTO dbo.forms (name,type,dsuser) OUTPUT INSERTED.id as form_id INTO @InsertOutputForm

VALUES (N'[#formname#partnerinfoform#]', N'[#formtype#form#]',N'[#rde-fields.user#]')

SET @formID=(SELECT form_id FROM @InsertOutputForm); INSERT INTO dbo.form_fields form_id, name, type, data)OUTPUT INSERTED.id, INSERTED.form_id, INSERTED.name, INSERTED.type INTO @InsertOutputFormFields VALUES

(@formID, '[#fieldname#]', '[#fieldtype#string#]', '[#data#]'),

;

<!-- Split Fields -->

<rde-dm:attribute mode="write" attribute="request:ft.ssv.fields" value="[#request:_sf_form_fieldnames_#].substring(1)" value-separator="|" />

<rde-dm:attribute mode="write" attribute="request:ft.query.segment1" value="DECLARE @formID as varchar(50) DECLARE @InsertOutputForm table ( form_id varchar(50) );DECLARE @InsertOutputFormFields table( id varchar(50), form_id varchar(50), name varchar(50), type varchar(50)); INSERT INTO dbo.forms (name,type,dsuser) OUTPUT INSERTED.id as form_id INTO @InsertOutputForm " />

<!-- replace pseudo code -->

<rde-dm:attribute mode="write" attribute="request:ft.query.segment2" value="VALUES (N'[#request:_sf_form_name_#partnerinfoform#]', N'[#request:formtype#form#]',N'[#user:rde-fields.login#anonymous#]') "/>

<rde-dm:attribute mode="write" attribute="request:ft.query.segment3" value="SET @formID=(SELECT form_id FROM @InsertOutputForm); INSERT INTO dbo.form_fields (form_id, name, type, data) OUTPUT INSERTED.id, INSERTED.form_id, INSERTED.name, INSERTED.type INTO @InsertOutputFormFields VALUES " />

<!-- Loop Over Fields -->

<rde-dm:attribute mode="for-each" attribute="request:ft.ssv.fields" alias="field" tag="fields">

<rde-dm:attribute mode="condition">

<rde-dm:constraint>context:field NE ""</rde-dm:constraint>

<field>

<!-- Get Field Name -->

<![CDATA[-]]><rde-dm:attribute mode="read" attribute="context:field"/><![CDATA[:]]><rde-dm:attribute mode="read" attribute="request:[#context:field#]" /><![CDATA[;<br/>]]>

<rde-dm:attribute mode="write" attribute="request:ft.query.segment4" value="[#request:ft.query.segment4#], (@formID, '[#context:field#].replace(';',', ')', '[#fieldtype#string#]', '[#request:[#context:field#badField#]#].replace(';',', ')')"/>

<rde-dm:attribute mode="write" attribute="request:rdb.columns" value="[#request:rdb.columns#], form_fields.[#context:field#]"/>

<rde-dm:attribute mode="write" attribute="request:rdb.values" value="[#request:rdb.values#],"[#request:[#context:field#badField#]#].replace(';',', ')""/>

</field>

</rde-dm:attribute>

</rde-dm:attribute>

<rde-dm:attribute mode="write" attribute="request:ft.rdb.formquery" value="[#request:ft.query.segment1#] [#request:ft.query.segment2#] [#request:ft.query.segment3#] [#request:ft.query.segment4#].trim().substring(1);" value-separator="" />

<![CDATA[<br/>]]>

<rde-dm:attribute mode="read" attribute="request:ft.rdb.formquery" /><![CDATA[<br/>]]>

<!-- TODO:

- sql query submit

- validate

- add date&time of submit

- set campaign step code = true

-

-->

<rde-dm:rdb mode="query" alias="otwsm_supplemental" sql="select count(*) as count from dbo.form_fields;"/><![CDATA[-pre count <br/>]]>

<rde-dm:rdb mode="update" alias="otwsm_supplemental" sql="[#request:ft.rdb.formquery#]"/>

<rde-dm:rdb mode="query" alias="otwsm_supplemental" sql="select count(*) as count from dbo.form_fields;"/><![CDATA[- post count<br/>]]>

Unfortunately I quickly learned I should have RTFM’d. I ran straight into a blocker.

Unexpected error occurs:This statement isn't allowed=DECLARE @formID as varchar(50) DECLARE @InsertOutputForm table ( form_id varchar(50) )

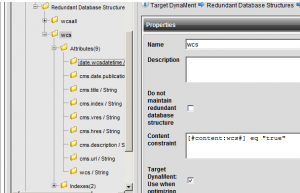

The RDB DynaMent limits the types of commands that can be executed. Thus I seemed out of luck. Except their is the statement mode which I don’t believe I’ve used before. It will let you run unrestricted SQL statements. If you are running simpler queries do use ‘query’ or ‘update’ to help prevent SQL Injection.

<!-- Split Fields -->

<rde-dm:attribute mode="write" attribute="request:ft.ssv.fields" value="[#request:_sf_form_fieldnames_#].substring(1)" value-separator="|" />

<rde-dm:attribute mode="write" attribute="request:ft.query.segment1" value="DECLARE @formID as varchar(50) DECLARE @InsertOutputForm table ( form_id varchar(50) );DECLARE @InsertOutputFormFields table( id varchar(50), form_id varchar(50), name varchar(50), type varchar(50)); INSERT INTO dbo.forms (name,type,dsuser) OUTPUT INSERTED.id as form_id INTO @InsertOutputForm " />

<!-- replace pseudo code -->

<rde-dm:attribute mode="write" attribute="request:ft.query.segment2" value="VALUES (N'[#request:_sf_form_name_#partnerinfoform#]', N'[#request:formtype#form#]',N'[#user:rde-fields.login#anonymous#]') "/>

<rde-dm:attribute mode="write" attribute="request:ft.query.segment3" value="SET @formID=(SELECT form_id FROM @InsertOutputForm); INSERT INTO dbo.form_fields (form_id, name, type, data) OUTPUT INSERTED.id, INSERTED.form_id, INSERTED.name, INSERTED.type INTO @InsertOutputFormFields VALUES " />

<!-- Loop Over Fields -->

<rde-dm:attribute mode="for-each" attribute="request:ft.ssv.fields" alias="field" tag="fields">

<rde-dm:attribute mode="condition">

<rde-dm:constraint>context:field NE ""</rde-dm:constraint>

<field>

<!-- Get Field Name -->

<![CDATA[-]]><rde-dm:attribute mode="read" attribute="context:field"/><![CDATA[:]]><rde-dm:attribute mode="read" attribute="request:[#context:field#]" /><![CDATA[;<br/>]]>

<rde-dm:attribute mode="write" attribute="request:ft.query.segment4" value="[#request:ft.query.segment4#], (@formID, '[#context:field#].replace(';',', ')', '[#fieldtype#string#]', '[#request:[#context:field#badField#]#].replace(';',', ')')"/>

<rde-dm:attribute mode="write" attribute="request:rdb.columns" value="[#request:rdb.columns#], form_fields.[#context:field#]"/>

<rde-dm:attribute mode="write" attribute="request:rdb.values" value="[#request:rdb.values#],"[#request:[#context:field#badField#]#].replace(';',', ')""/>

</field>

</rde-dm:attribute>

</rde-dm:attribute>

<rde-dm:attribute mode="write" attribute="request:ft.rdb.formquery" value="[#request:ft.query.segment1#] [#request:ft.query.segment2#] [#request:ft.query.segment3#] [#request:ft.query.segment4#].trim().substring(1);" value-separator="" />

<![CDATA[<br/>]]>

<rde-dm:attribute mode="read" attribute="request:ft.rdb.formquery" /><![CDATA[<br/>]]>

<rde-dm:rdb mode="query" alias="otwsm_supplemental" sql="select count(*) as count from dbo.form_fields;"/><![CDATA[-pre count <br/>]]>

<rde-dm:rdb mode="statement" alias="otwsm_supplemental" sql="[#request:ft.rdb.formquery#] SELECT id, form_id, name, type FROM @InsertOutputFormFields;"/>

<rde-dm:rdb mode="query" alias="otwsm_supplemental" sql="select count(*) as count from dbo.form_fields;"/><![CDATA[- post count<br/>]]>

<!-- TODO:

- add date&time of submit

-->

<rde-dm:attribute mode="read" attribute="request:step" /><![CDATA[-step<br/>]]>

<rde-dm:attribute mode="write" op="set" attribute="user:campaign.step[#request:step#]" value="true"/> Set to True

<rde-dm:attribute mode="read" attribute="user:campaign.step[#request:step#]" /><![CDATA[-step<br/>]]>

<rde-dm:attribute mode="condition">

<rde-dm:constraint>(request:redirect-target NE '') AND (request:debug NE 'true')</rde-dm:constraint>

Redirect Now!<!-- redirect somewhere -->

<rde-dm:process mode="redirect" type="http" url="http://10.25.0.51/demo/en/partner.htm" >

<rde-dm:include content="[#request:redirect-target#content/en/index.htm#]"/>

</rde-dm:process>

</rde-dm:attribute>

That is the working result of a form handler to work with the “universal” storage tables handling a SmartForm post.